AI.Society: Robotics and AI applications of the future

Exhibition and dialogue platform

By humans for humans

Whether in our private or professional lives, in social or economic terms: robotics and artificial intelligence have fundamentally changed our lives and will continue to do so. Which areas will these innovative technologies affect most? What new applications will we benefit from in the future?

In addition to concrete applications for work environments, healthcare, mobility, and the environment, AI.Society also sheds light on ethical issues in the field of robotics and AI, because the associated social transformation brings both opportunities and new challenges. This makes it all the more important to shape this transformation carefully. Representatives from science, business, politics, and society will jointly develop visions and approaches for how this process can help create a future worth living: a future that always considers society.

The focus topics

The Future of Work

Nothing influences and changes the world of work as profoundly as the development of new technologies and production resources. The way these technologies are developed, however, has started to change. For example, researchers at the Technical University of Munich (TUM) are now taking a people-centered approach in their vision of the factory of the future. This is not about the replacement or disenfranchisement of humans by technology, but about strengthening their skills, expanding their craftsmanship, and creating a safe working environment.

The interdisciplinary Work@MIRMI network includes more than 20 institutes from the faculties of Mechanical Engineering, Computer Science, Automation, Electrical Engineering, Construction, Geography, Environment, Sports, and Health. It conducts research into challenges such as demographic change, the shortage of skilled workers, climate change, and Europe's competitive position on a global scale. One of its lighthouse projects is the KI.FABRIK of the Bavarian High-Tech Agenda, which is to be implemented by 2030 to provide resilient and profitable facilities producing state-of-the-art IT and high-tech mechatronics components. AI.Society offers deep insights into various showcases.

SHOWCASES WORK 2025

We present a system that allows people to teach robots new tasks without any programming knowledge. Users simply show the robot how to do something in a basic setting. The system then analyzes the human demonstration and creates a flexible sequence of actions (called "skills") that the robot can learn. These skills can later be reused in more complex situations. This approach makes robot training faster, more intuitive, and accessible to people without technical backgrounds.

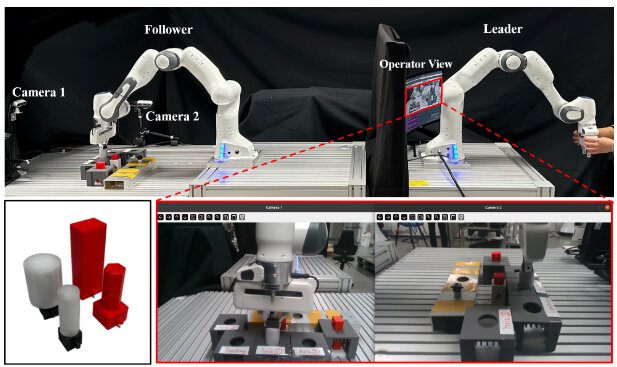

We showcase a system that supports humans in remote-controlled industrial tasks using a digital twin—a virtual copy of the real setup. This digital twin is combined with artificial intelligence, specifically reinforcement learning, to automatically learn “virtual guides” (called Virtual Fixtures). These guides help human operators by making it easier and safer to perform precise tasks like assembling components from a distance.

Our demo introduces the concept of collective learning—where robots learn together and share their knowledge. A short, engaging video will explain how this process works in simple terms. Visitors can then see two real robots in action, demonstrating how knowledge gained through collective learning can be applied to remote-controlled (teleoperated) tasks.

A Digital Twin is a virtual copy of a real-world system that allows us to monitor, analyze, and improve performance in real time. In this demo, we show how our Digital Process Twin is used in a BMW production scenario. The system connects engineering and live data using tools like the Asset Administration Shell, Microsoft Azure, and Omniverse. Visitors can try it themselves by placing custom orders through the Central.AI platform and watching the production progress digitally.

Today’s growing demand for customized products requires production lines to quickly adapt. In this demo, we present an AI-based planning system that helps organize production tasks more efficiently using smart data structures called “ontologies.” The flexible assembly station includes a robotic arm with four different grippers and is designed for small-scale, personalized manufacturing. A special feature of the demo: if a gear part is missing, either a worker or a visitor can hand over the missing part, and the robot will immediately continue with the gear assembly.

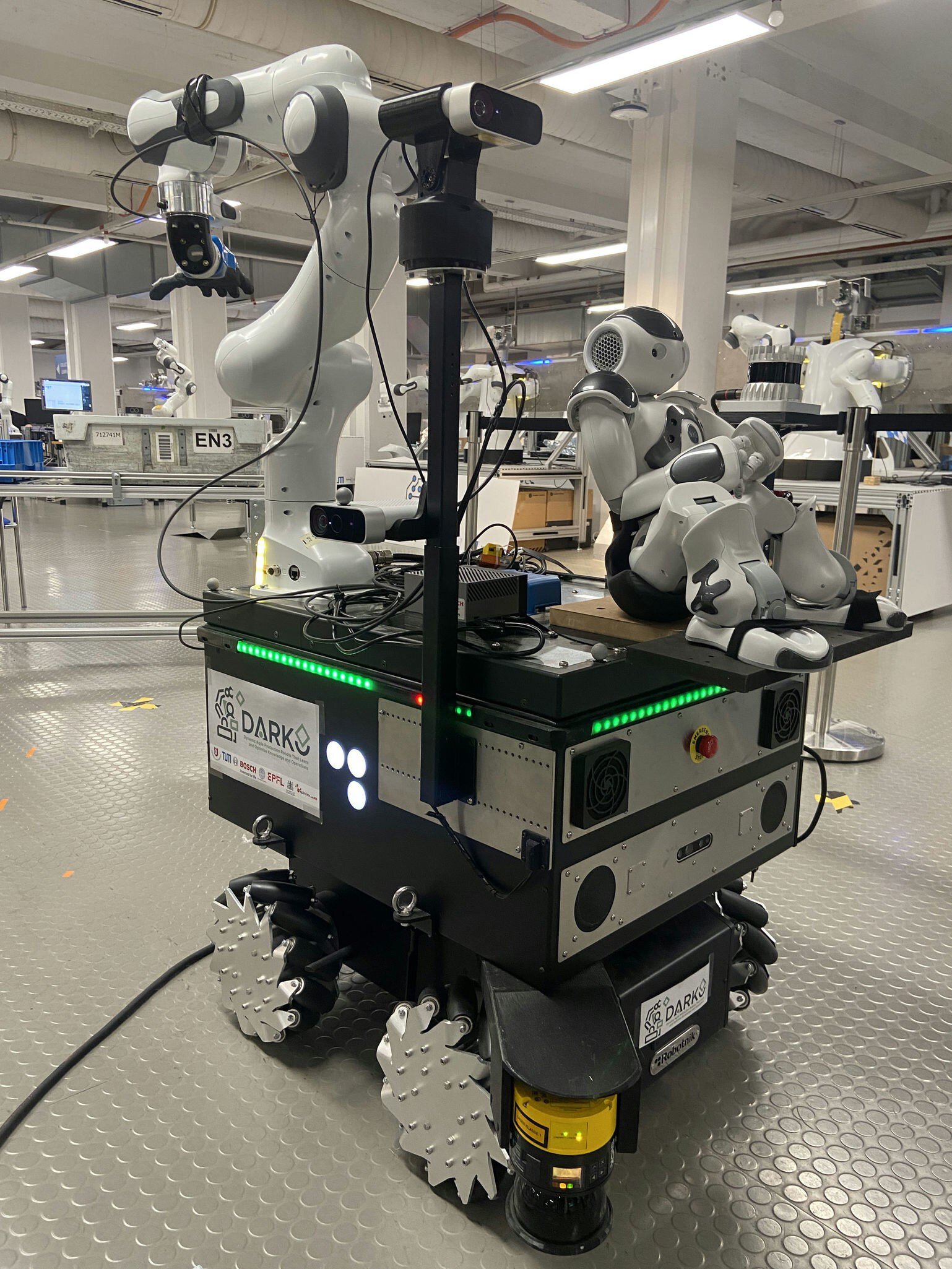

In this demo, two robots work together to handle complex tasks. The MiR robot is in charge of transporting items and moving safely through the environment. It can also manage flexible and bendable objects like cables and wires, which are difficult to handle automatically. The DARKO robot works alongside MiR, focusing on picking up and manipulating these objects with precision.

This demo shows how multiple robots can work together to install a cable—something that’s still mostly done by hand in today’s factories. First, a robot selects the correct cable from a pile of different ones. Then, robotic arms carefully pass the cable between each other, coordinating the movement. Finally, the robots mount the cable with precision, ensuring it is securely attached. Handling soft, flexible materials like cables is a big challenge for automation. With this setup, we demonstrate how robots can take over such tasks in manufacturing.

In mass production, design and engineering costs are spread over many identical products. But in modern Industry 4.0, where customers want unique, customized items, these costs become a major challenge. Our demo shows how Generative Artificial Intelligence (AI) can help. By using advanced image-generation models and smart feedback techniques, the system quickly creates and improves product designs—step by step—together with a human designer. The designer gives input, the AI proposes changes, and this loop continues until a final design is ready. This method is ideal for “Batch Size 1” production, where every product is different. Once approved, the design can be directly 3D-printed and used.
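
The cost argument above can be made concrete with a small calculation. The sketch below is illustrative only: the function name and all figures are hypothetical and are not taken from the demo. It spreads a one-time design cost over a batch and compares a large batch with a batch of one.

```python
def cost_per_unit(design_cost: float, production_cost_per_unit: float, batch_size: int) -> float:
    """Spread a one-time design cost evenly over a batch and add the per-unit production cost."""
    if batch_size < 1:
        raise ValueError("batch_size must be at least 1")
    return design_cost / batch_size + production_cost_per_unit


# Hypothetical figures, chosen only to illustrate the effect of batch size.
DESIGN_COST = 10_000.0
PRODUCTION_COST = 5.0

mass_production = cost_per_unit(DESIGN_COST, PRODUCTION_COST, batch_size=10_000)
batch_size_one = cost_per_unit(DESIGN_COST, PRODUCTION_COST, batch_size=1)

print(mass_production)  # 6.0
print(batch_size_one)   # 10005.0
```

With these assumed numbers, the design cost is negligible in mass production but dominates the price of a one-off product, which is why speeding up the design loop matters most for "Batch Size 1".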

This demo features a mobile robot that can move around safely, work with people, and interact with its surroundings. In a practical example inspired by industry, the robot will navigate to a target location, pick up an object, and throw it to another spot using an elastic gripper and a custom air-powered tool. The throwing action shows how robots can handle dynamic tasks with precision. An anthropomorphic robot figure—acting like a human driver—will be present to demonstrate human-robot interaction. A second robot platform will demonstrate improved throwing performance thanks to a specially developed flexible robotic arm.

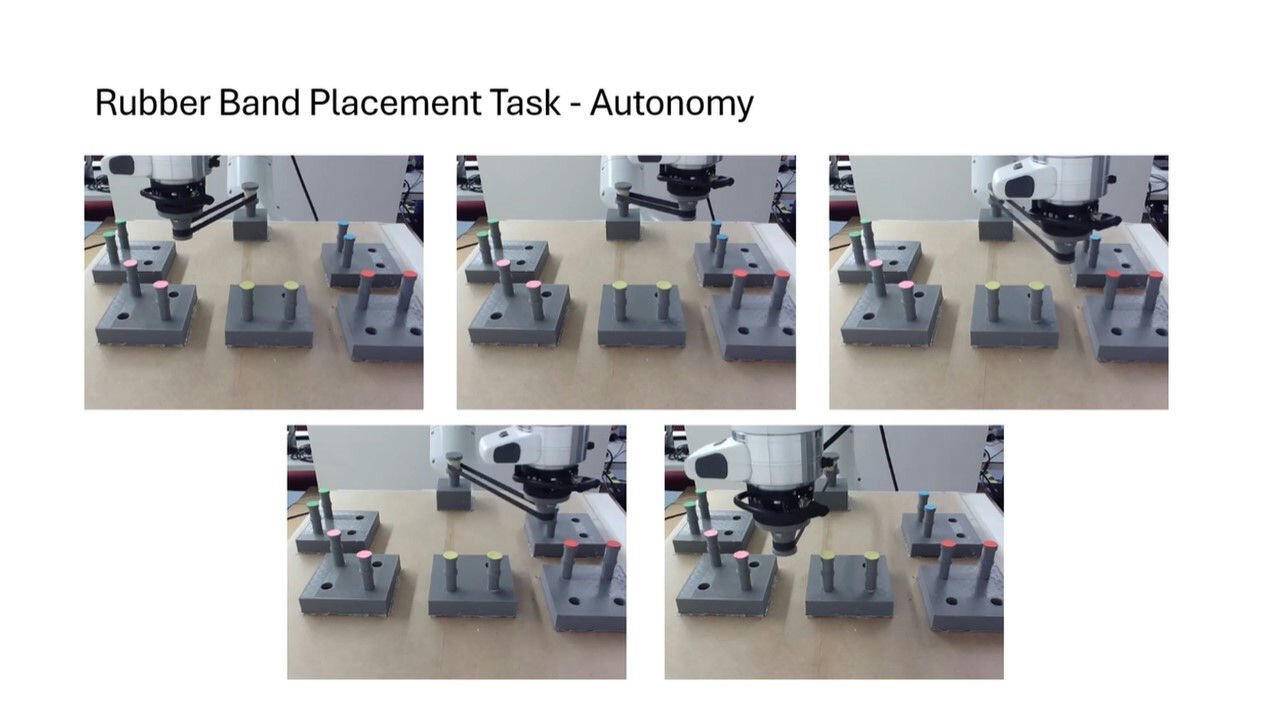

This demo presents a remote teaching platform where a robot learns a precise task—placing a rubber band. The human controls the robot from a distance using a system that provides touch feedback, even under simulated internet delays. The robot then uses what it has learned to repeat the task on its own. The goal is to show how robots can learn from remote demonstrations, even during communication delays. The system follows the IEEE P1918.1.1 standard and uses advanced methods to ensure safe and realistic control.

DeepSynergy.AI presents an innovative AI-based solution for smarter production planning. This demo shows how the system can automatically create and optimize complex production schedules by analyzing real-time factory data. The software helps manufacturers respond faster to changing demands, avoid delays, and make better use of resources.

Our exoskeleton is a game-changer! Using strong steel cables powered by electric motors, it supports the user’s arms during physical tasks. A soft, textile-based structure also helps support the back. This smart system is designed to reduce strain and fatigue for people working in logistics and manufacturing. It helps workers stay healthy, feel better, and stay productive throughout the day.

Come visit our booth and see the demonstrator live in action!

At our booth, we will present an exciting prototype: a lower-body humanoid robot that can stand—and ideally walk. Our goal? To build the world’s fastest humanoid robot! Inspired by the groundbreaking BirdBot from the Max Planck Institute, our robot uses a unique leg design based on nature. This allows for fast, energy-efficient movement, even outdoors on uneven ground. Unlike other robots made for indoor use, ours is built for real-world challenges like rescue missions, logistics, and human mobility. With smart AI control and reinforcement learning, we train the robot to move smoothly and adapt to complex environments. Our vision: a robot that can sprint 100 meters at over 15 km/h.
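
To put the sprint target in perspective, the short calculation below converts the stated speed into a time over 100 meters. It is plain unit arithmetic; the function name is our own and not part of the project.

```python
def sprint_time_seconds(distance_m: float, speed_kmh: float) -> float:
    """Time needed to cover distance_m at a constant speed given in km/h."""
    if speed_kmh <= 0:
        raise ValueError("speed_kmh must be positive")
    # 1 km/h equals 1/3.6 m/s, so time = distance / (speed / 3.6).
    return distance_m * 3.6 / speed_kmh


print(round(sprint_time_seconds(100, 15), 1))  # 24.0
```

At exactly 15 km/h, 100 meters take about 24 seconds, so "over 15 km/h" means finishing in less than that.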

This demo showcases a bipedal robot that can walk and perform tasks using learning-based control. As part of the AI.Society space, the robot will move autonomously through the main walkway and interact with visitors in engaging ways. It can carry a basket to distribute flyers about MIRMI projects or hand out 3D-printed gifts like keychains or bottle openers with the MIRMI logo. Visitors can also ask the robot to guide them to specific booths at the exhibition.

The Future of Mobility

Mobility moves us all, quite literally, and that includes robotics and AI.Society. Sustainable and flexible mobility solutions will shape our future. Autonomous vehicles, interconnected mobility, flying robots, dynamics modeling, and collision detection are essential components of mobile robotics. In addition, AI makes it easier for us to access new forms of mobility and helps us eliminate human error.

SHOWCASES MOBILITY 2025

We've developed a semi-automated cycle rickshaw that offers a fresh approach to urban mobility. Compared with typical automated vehicle prototypes, the rickshaw is far simpler and much cheaper to develop, yet it uses the standard software and hardware found in self-driving cars. Its reduced complexity allows for faster testing and development. Thanks to its compact size, the rickshaw can potentially operate on bike paths or in areas closed to cars, like city parks (e.g., Munich’s Englischer Garten). This opens up new possibilities for efficient and eco-friendly transport of people and goods in dense urban areas.

Walking outdoors can be challenging for blind people due to unexpected obstacles on sidewalks. While the white cane helps, it often doesn’t detect everything. SmartAIs is a user-friendly smartphone app that uses artificial intelligence, computer vision, and built-in phone sensors to scan the area in front of the user in real time. It identifies obstacles and safe paths. When something is detected, the app sends audio alerts through headphones—either as tones or spoken messages. The system runs entirely on the smartphone, which is carried safely in a specially designed chest bag.

The Future of Health

Many robots previously used in industrial settings are now being adapted to meet the more demanding challenges of the healthcare sector. Why? It is a response to an aging population and the shortage of healthcare professionals. Computer vision, machine learning, and virtual and augmented reality, among other technologies, will take robots to an entirely new level, enabling them to become true members of staff.

SHOWCASES HEALTH 2025

Our hands are essential for everything from grabbing objects and sensing our surroundings to communicating with others. When someone loses the fine motor skills of their arms or hands, it can be a major medical and emotional challenge. Modern prosthetic devices can help restore some abilities, but many users still find that today’s technologies don’t fully meet their needs.

At our booth, we’ll showcase new ideas for smarter and more intuitive prosthetic hands. Visitors can explore advanced robotic hands already on the market, experimental models made from soft materials, and cutting-edge ways of controlling prostheses using virtual reality. Our goal is to make prosthetic technology more responsive, natural, and useful in everyday life.

This next-generation powered prosthesis is designed to move more naturally and respond better to users' needs. It combines innovative features like flexible joint movement, adaptive interaction with objects, and gravity compensation to reduce effort. All components—motors, sensors, and a small onboard computer—are integrated into a compact design for real-time control during daily activities. The system can also be paired with a robotic hand to offer a complete arm solution.

At our booth, visitors can see live demonstrations showing how the prosthesis adapts to different tasks and grip strengths. The design focuses on comfort, functionality, and ease of use. By using cutting-edge robotics, this innovation supports future improvements and makes life easier for people using artificial limbs.

This demo shows how muscle signals from the arm (EMG) can be used to control a robotic arm with seven joints and a two-finger gripper. The robot follows pre-defined movement paths, while the user decides when and how to grasp objects using their muscle activity. Visitors will see the system handle different tasks—from picking up items of varying shapes to folding a piece of cloth and operating a hand-like robotic tool. This approach blends human intention with robotic precision. Our research aims to improve how EMG signals are interpreted, making the system more reliable, responsive, and easier to use. The goal is to create more natural and effective ways for humans to interact with robotic systems in everyday tasks.

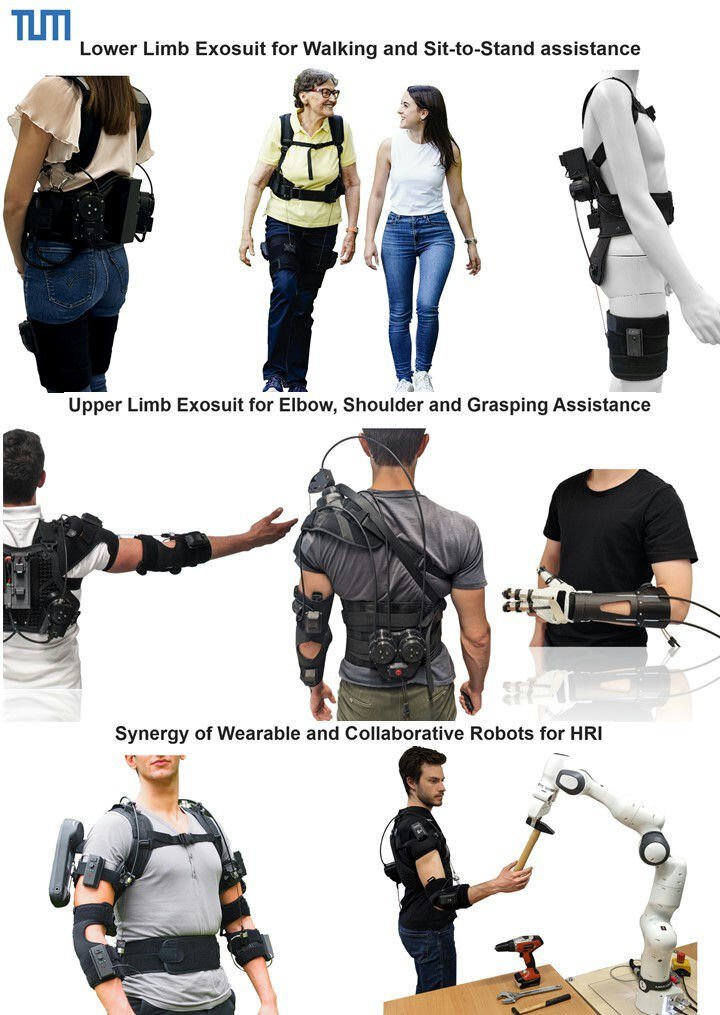

This demo presents soft, wearable exosuits that support and enhance natural body movement. These lightweight systems, made from fabric and powered by tendons, assist muscles during work and rehabilitation. Visitors will see different models in action: an elbow exosuit for arm support, a shoulder exosuit, a hip exosuit for easier walking, and an exoglove that helps move fingers and the wrist. These devices use real-time control and personalized movement models for smooth, natural motion. A collaborative robot will also show how humans and robots can work safely side by side. Whether in therapy or industry, these exosuits offer promising solutions to improve mobility, reduce strain, and boost performance in everyday tasks.

This demo shows how robots can help elderly people with everyday tasks, especially when it’s hard to hold or reach multiple things at once. Using a camera, the system watches what a person is doing and automatically recognizes the activity with the help of computer vision. Then, the robot figures out which additional object is needed and picks it up with a robotic arm to hand it over. For example, if the person picks up a toothbrush, the robot will hand over the toothpaste.
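
The decision step described above, choosing the next object once the activity is recognized, can be pictured as a simple lookup. The sketch below is a deliberately minimal illustration and not the demo's actual method: the table and function names are hypothetical, the camera and recognition steps are left out, and the only pairing included is the one from the example.

```python
from typing import Optional

# Pairing taken from the example in the text; a real system would need many more
# and would likely infer them rather than hard-code them (assumption).
COMPANION_OBJECT = {
    "toothbrush": "toothpaste",
}


def object_to_hand_over(recognized_object: str) -> Optional[str]:
    """Return the object the robot should fetch next, or None if no pairing is known."""
    return COMPANION_OBJECT.get(recognized_object.lower())


print(object_to_hand_over("toothbrush"))  # toothpaste
print(object_to_hand_over("spoon"))       # None
```

Returning `None` for unknown objects keeps the robot idle rather than guessing, which seems the safer default in an assistive setting.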

Our demo presents an advanced human-robot collaboration system for assistive and general use. At automatica – Munich_i 2025, we showcase the updated GARMI: a humanoid robot that can adjust its height and physically interact with people. GARMI uses real-time planning, adaptive movement and grasping, and certified safety control to assist with daily tasks. It recognizes activities, predicts human actions, and responds accordingly. Technologies like speech understanding, vision-based perception, and digital twins allow the robot to understand tasks, perform handovers, and learn from demonstrations. This highlights TUM’s interdisciplinary work in assistive robotics, addressing labor shortages and improving safe and intuitive human-robot collaboration. Alongside the integrated demo, we will also feature contributions from various TUM chairs, showing the wide range of expertise behind this innovation.

Our demo shows the vision behind GARMI, a robot designed to support healthcare and daily life. Visitors will see GARMI perform tasks both autonomously and under remote human control. Key demonstrations include:

Remote Ultrasound: GARMI performs a live ultrasound scan controlled by a medical expert from afar.

Caregiving Tasks: The robot moves a medical trolley on its own, helping with transport and support in clinics.

Grasping Objects: GARMI picks up and handles different objects with care, showing fine motor control.

Telepresence Control: Using robotic arms, a remote user can control GARMI in real time—even with slight internet delays.

These demos highlight GARMI’s potential to assist in hospitals, homes, and underserved areas—bridging human care and robotic help.

In the SASHA-OR research project, an intelligent assistant robot is being developed to support surgical teams directly at the operating table. Designed for sterile environments, the robot helps manage instruments and materials during operations. Thanks to advanced touch sensors and computer vision, it can recognize tools, verbally interact with the surgical team, and safely hand over or receive laparoscopic instruments and sterile items.

Unmanned medical transport is shaping the future of rescue operations in dangerous or hard-to-reach areas. In the EU-funded iMEDCAP project, a medical strategy is being developed to provide care during transport without human personnel on board. One critical emergency is a tension pneumothorax—a rare but life-threatening condition. Normally, medical staff can treat it quickly. But during unmanned transport, it could be fatal. That’s why the world’s first robotic system for tension pneumothorax relief was created. This module can automatically insert a needle to relieve pressure in the chest, using real-time ultrasound guidance. It’s a key step toward saving lives when human help is not immediately available.

Access to medical care is often limited in remote or underserved regions due to a lack of infrastructure and specialized staff. Our semiautonomous telerobotic examination suite aims to close this gap by enabling remote medical presence and high-precision diagnostics. Using advanced robotics and communication technologies, doctors can examine patients from afar while ensuring safety and quality. The system integrates various medical tools and is prepared for emergency scenarios. It also serves as a test platform for exploring the limits of today’s networks and the possibilities of future 6G technology.

This demo illustrates research and development in tele-surgery, where robotic systems can perform operations remotely. The platform features an open control structure that allows for flexible development and innovation. Surgeons receive real-time tactile feedback, giving them a better sense of touch while operating from a distance. The system also uses smart strategies to manage delays in communication and integrates AI assistants that help guide the surgery with shared or supervised control. Cutting-edge 5G and future 6G networks ensure fast and stable connections. To keep everything safe, a live monitoring security system protects against potential network disruptions.

The Future of Environment

Climate and environmental protection are among the most pressing issues of our time. How can AI and smart robots support coordinated efforts to tackle this major global challenge? Various applications are already being used in sustainable agriculture, environmental protection, and air quality measurement.

SHOWCASES ENVIRONMENT 2025

Our demo shows how robots-as-a-service can support environmental monitoring to help life scientists in their work. At the center is the SVan – a mobile hub that manages and deploys robots. Visitors can explore tasks like water quality checks or soil sampling, performed by a ground robot equipped with a robotic arm. This robot can operate both autonomously and with human input. A stationary drone provides an aerial view, showing how different types of robots can work together. We also showcase the BlueROV2, an underwater robot that can inspect and interact with the environment below the surface.

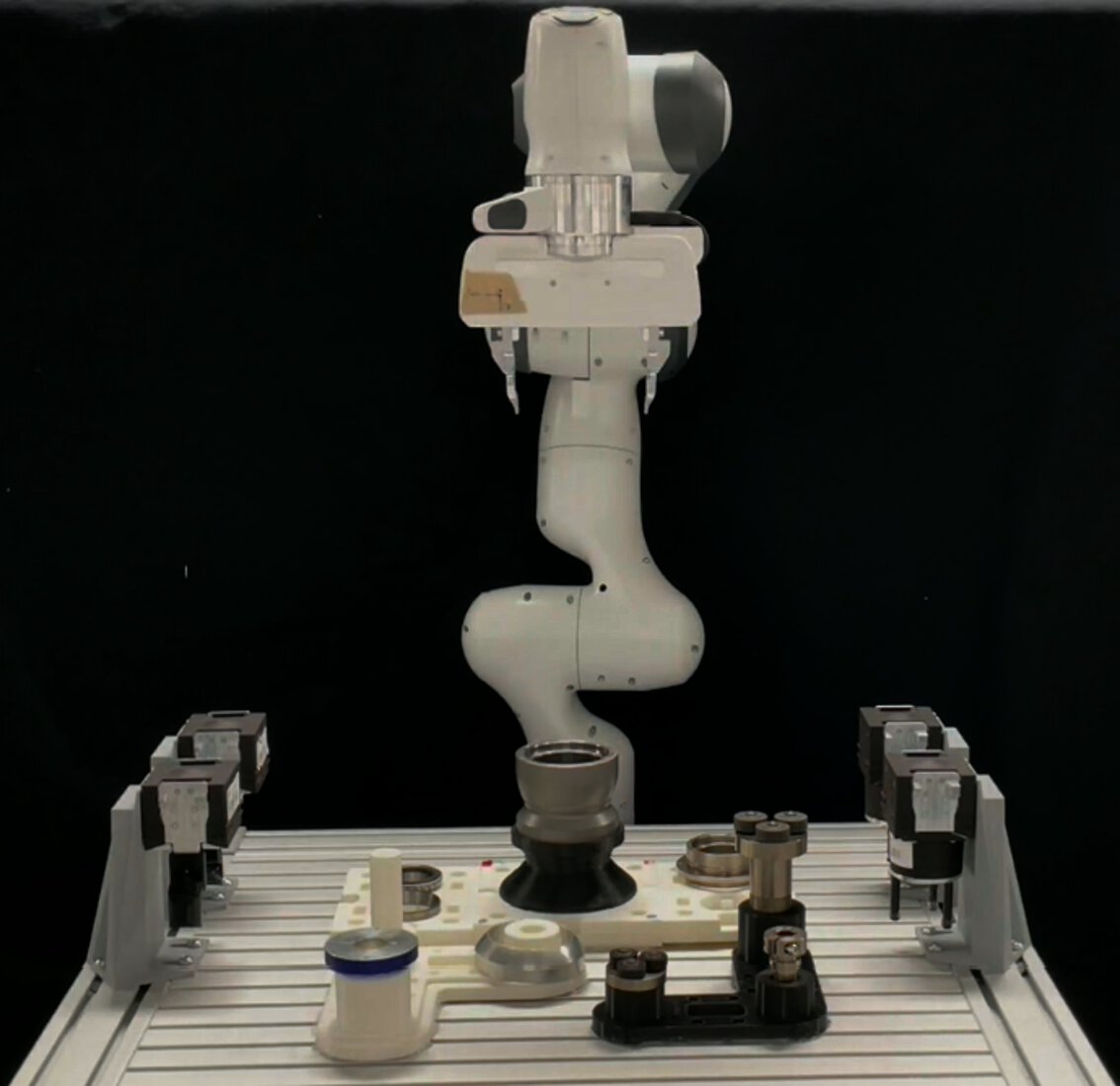

In this demo, we present the winning solution of the euROBIN Manipulation Skill Versatility Challenge using the Franka Panda robot and an electronic task board from the IROS 2024 conference. The demonstration shows how robots can perform complex tasks like identifying objects, inserting pegs, opening doors, wrapping cables, and probing electronic circuits. These skills are essential for flexible and intelligent automation. This demo complements the new Robothon Grand Challenge by showcasing a successful example from a past competition, offering insight into real-world robotics research. The tasks are executed by a robot from MIRMI using the solution developed by the TU Delft team.